Statement

In the current system, the Airflow UI is accessible to everyone, which makes it very difficult to track any action (mainly write transactions) performed through the UI. There is a high probability of the system being disrupted if workflows are triggered or deleted through the UI alone. We therefore realised the need to authenticate UI users through LDAP, so that each user's credentials are verified against an LDAP server. Apache Airflow introduced a role-based access control (RBAC) feature in 1.10, which built the foundation for improving Airflow UI security. However, the RBAC feature comes with its own limitations, as it only supports five static role types.

Pre-requisites

We need the details of the LDAP server, along with one account to use as the bind account. For this write-up I am using my own LDAP credentials for binding, but you should create a dedicated proxy user for this purpose. I am using the following LDAP server settings:

| KEY | VALUE | Comments |
| --- | --- | --- |
| AUTH_LDAP_SERVER | ldaps://ldap.xxx.yyy.net:636 | Use your own LDAP server. |
| AUTH_LDAP_SEARCH | ou=Users,o=corp | |
| AUTH_LDAP_BIND_USER | cn=<proxy_user>,ou=Users,o=corp | Create a proxy user instead of using a personal account. |
| AUTH_LDAP_BIND_PASSWORD | 'REPLACE_YOUR_LDAP_PASSWORD' | To be replaced with the proxy user's password. |
| AUTH_LDAP_UID_FIELD | uid | |
| AUTH_LDAP_ALLOW_SELF_SIGNED | True | Set to False when using the LDAP server's certificate. |
| AUTH_LDAP_USE_TLS | False | |
| AUTH_LDAP_TLS_CACERTFILE | /etc/ssl/certs/ldap_ca.crt | Path to the CA certificate file; a self-signed certificate can be created for this. |
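Because AUTH_LDAP_BIND_PASSWORD is a credential, one option is to keep it out of the configuration file and read it from the environment when the configuration is loaded. The following is a minimal sketch under that assumption. The environment variable name AIRFLOW_LDAP_BIND_PASSWORD and the helper load_bind_password are hypothetical names chosen for illustration; they are not part of Airflow or Flask-AppBuilder.

```python
import os


def load_bind_password(env=None, var_name="AIRFLOW_LDAP_BIND_PASSWORD"):
    """Return the LDAP bind password from the environment.

    `var_name` is a hypothetical variable name used only in this sketch.
    A RuntimeError is raised when the variable is missing or empty, so a
    misconfigured deployment fails at startup rather than at first login.
    """
    env = os.environ if env is None else env
    password = env.get(var_name, "")
    if not password:
        raise RuntimeError(f"{var_name} is not set")
    return password
```

With this helper in place, the setting could be written as AUTH_LDAP_BIND_PASSWORD = load_bind_password() instead of a literal string.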

Changes required at application side

- To turn on LDAP authentication, first modify airflow.cfg to remove the existing LDAP configuration, if it exists. This can be done by removing the values to the right of the equal sign under [ldap] in airflow.cfg. Alternatively, the [ldap] section can be removed entirely.

- Next, modify airflow.cfg to add rbac = true and to remove 'authenticate = True' under the [webserver] section (rbac can also be set through the environment variable AIRFLOW__WEBSERVER__RBAC=true). Also remove the authentication backend line (auth_backend), if it exists.

- Finally, create a webserver_config.py file in the AIRFLOW_HOME directory (where airflow.cfg is also located). This file holds all the LDAP settings and credentials:

import os

from airflow import configuration as conf

from flask_appbuilder.security.manager import AUTH_LDAP

basedir = os.path.abspath(os.path.dirname(__file__))

SQLALCHEMY_DATABASE_URI = conf.get('core', 'SQL_ALCHEMY_CONN')

CSRF_ENABLED = True

AUTH_TYPE = AUTH_LDAP

AUTH_ROLE_ADMIN = 'Admin'

AUTH_USER_REGISTRATION = True

AUTH_USER_REGISTRATION_ROLE = 'Admin'

AUTH_LDAP_SERVER = 'ldaps://ldap.xxx.yyy.net:636'

AUTH_LDAP_SEARCH = 'ou=Users,o=corp'

AUTH_LDAP_BIND_USER = 'cn=ldap-proxy,ou=Users,o=corp'

AUTH_LDAP_BIND_PASSWORD = 'YOUR_PASSWORD'

AUTH_LDAP_UID_FIELD = 'uid'

AUTH_LDAP_USE_TLS = False

AUTH_LDAP_ALLOW_SELF_SIGNED = False

AUTH_LDAP_TLS_CACERTFILE = '/etc/ssl/certs/ldap.crt'

- Note that this requires a valid CA certificate at the specified location to verify the SSL certificate presented by the LDAP server. A self-signed certificate can also be used; in that case, set AUTH_LDAP_ALLOW_SELF_SIGNED to True, as listed in the pre-requisites.
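The airflow.cfg changes described in the steps above can also be scripted. The sketch below uses Python's standard configparser module to drop the [ldap] section, set rbac under [webserver], and remove the legacy authentication keys. The function name enable_rbac is hypothetical, and the list of keys to remove is an assumption: it includes both "authenticate" and "authentication" so that either spelling is handled. Note that configparser does not preserve comments when writing the file back, so this is best applied to a copy first.

```python
import configparser
import io

# Keys to drop from [webserver]. Both spellings of the flag are listed as a
# precaution (assumption); removing a key that is absent is harmless.
LEGACY_KEYS = ("authenticate", "authentication", "auth_backend")


def enable_rbac(cfg_text):
    """Return airflow.cfg text with RBAC on and legacy LDAP settings removed."""
    # interpolation=None leaves '%' characters in values untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)

    # Drop the old [ldap] section entirely, if present.
    parser.remove_section("ldap")

    if not parser.has_section("webserver"):
        parser.add_section("webserver")
    parser.set("webserver", "rbac", "True")
    for key in LEGACY_KEYS:
        parser.remove_option("webserver", key)

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()
```

The function takes and returns text, so it can be tried on a sample string before being pointed at the real file.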

RBAC UI Security

Prior to 1.10, Airflow was built upon the flask-admin framework, which did not provide any access control functionality. In 1.10, Airflow switched over to Flask-AppBuilder (FAB), which provides the security features needed to support RBAC. When running with rbac=True in the config, the security of the Airflow webserver UI is handled by FAB. Airflow's DAG-level access feature was introduced in Airflow 1.10.2, with additional enhancements in 1.10.3.

FAB Internals

FAB is a web framework built on top of Flask that includes security modeling, automatic CRUD generation, and integration with different authentication mechanisms. It has a built-in security manager, which is instantiated by the app to handle security operations.

Airflow RBAC Roles

Airflow ships with a set of roles by default: Admin, User, Op, Viewer, and Public. Only Admin users can configure or alter the permissions of other roles. However, it is not recommended that Admin users alter these default roles in any way by adding or removing permissions.

Admin

Admin users have all possible permissions, including granting or revoking permissions from other users. We will keep this role for a few of us, if required.

Public

Public users (anonymous) don't have any permissions. These are non-authenticated users.

Viewer

Viewer users have limited permissions: they have read access to DAGs but cannot modify the state of the Airflow metastore. The rest of the users/clients will fall into this category. The Viewer permissions are:

VIEWER_PERMS = {

'menu_access',

'can_index',

'can_list',

'can_show',

'can_chart',

'can_dag_stats',

'can_dag_details',

'can_task_stats',

'can_code',

'can_log',

'can_get_logs_with_metadata',

'can_tries',

'can_graph',

'can_tree',

'can_task',

'can_task_instances',

'can_xcom',

'can_gantt',

'can_landing_times',

'can_duration',

'can_blocked',

'can_rendered',

'can_pickle_info',

'can_version',

}

These permissions apply to a limited set of web views:

VIEWER_VMS = {

'Airflow',

'DagModelView',

'Browse',

'DAG Runs',

'DagRunModelView',

'Task Instances',

'TaskInstanceModelView',

'SLA Misses',

'SlaMissModelView',

'Jobs',

'JobModelView',

'Logs',

'LogModelView',

'Docs',

'Documentation',

'GitHub',

'About',

'Version',

'VersionView',

}

User

Users with the User role have the Viewer permissions plus these additional user permissions:

USER_PERMS = {

'can_dagrun_clear',

'can_run',

'can_trigger',

'can_add',

'can_edit',

'can_delete',

'can_paused',

'can_refresh',

'can_success',

'muldelete',

'set_failed',

'set_running',

'set_success',

'clear',

'can_clear',

}

These apply to the User web views, which are the same as the Viewer web views.

Op

Op users have the User permissions plus these additional Op permissions:

OP_PERMS = {

'can_conf',

'can_varimport',

}

These apply to the User web views plus the following additional Op web views:

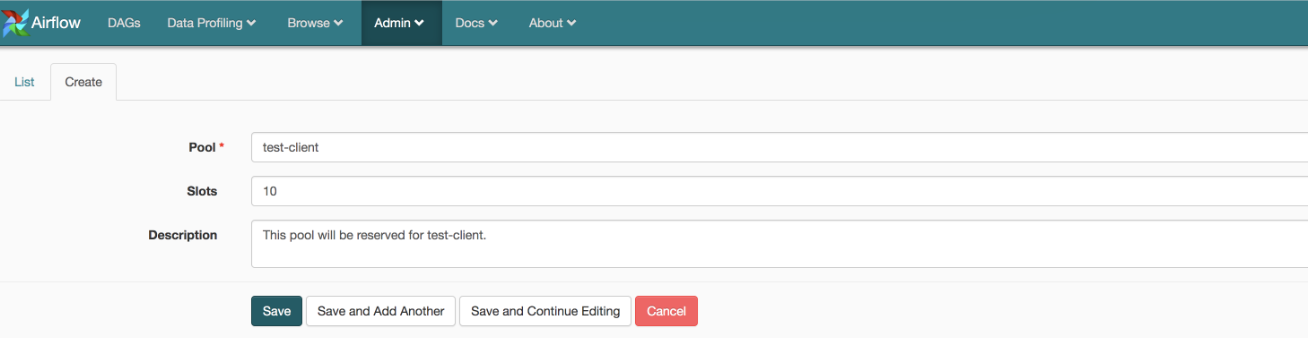

OP_VMS = {

'Admin',

'Configurations',

'ConfigurationView',

'Connections',

'ConnectionModelView',

'Pools',

'PoolModelView',

'Variables',

'VariableModelView',

'XComs',

'XComModelView',

}
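The layering of the default roles described above (User builds on Viewer, and Op builds on User) can be illustrated with plain set unions. This is a simplified sketch, not Airflow's implementation: the permission sets are abbreviated, the Admin role (which has every permission) is left out, and the web-view dimension is ignored. ROLE_PERMS and role_can are hypothetical names used only for this example.

```python
# Abbreviated permission sets; the full lists are given in the text above.
VIEWER_PERMS = {"menu_access", "can_index", "can_list", "can_show"}
USER_PERMS = {"can_trigger", "can_edit", "can_delete"}
OP_PERMS = {"can_conf", "can_varimport"}

# Each role inherits everything granted to the role below it.
ROLE_PERMS = {
    "Public": set(),
    "Viewer": VIEWER_PERMS,
    "User": VIEWER_PERMS | USER_PERMS,
    "Op": VIEWER_PERMS | USER_PERMS | OP_PERMS,
}


def role_can(role, permission):
    """Return True if the (simplified) role includes the permission."""
    return permission in ROLE_PERMS.get(role, set())
```

For example, role_can("Viewer", "can_trigger") is False in this model, while role_can("Op", "can_trigger") is True, because Op includes everything granted to User.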

Custom Roles

DAG Level Role

An Admin can create a set of roles that are only allowed to view a certain set of DAGs. This is called DAG-level access. Each DAG defined in the DAG model table is treated as a view that has two permissions associated with it (can_dag_read and can_dag_edit). There is a special view called all_dags, which allows a role to access all DAGs. The default Admin, Viewer, User, and Op roles can all access the all_dags view. We still need to investigate how to integrate this feature into the application.
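To make the DAG-level model concrete, the sketch below represents a role as a set of (permission, view) pairs and checks access the way the paragraph describes: a role may reach a DAG either through a pair for that specific DAG or through the special all_dags view. This is only an illustrative model and not Airflow's code; the function can_access_dag, the role team_role, and the DAG ids are hypothetical.

```python
ALL_DAGS = "all_dags"  # special view that grants access to every DAG


def can_access_dag(role_perms, dag_id, permission="can_dag_read"):
    """Check a DAG-level permission against a role's (permission, view) pairs."""
    return (permission, ALL_DAGS) in role_perms or (permission, dag_id) in role_perms


# Hypothetical custom role: may read two DAGs but edit only one of them.
team_role = {
    ("can_dag_read", "sales_daily"),
    ("can_dag_read", "sales_weekly"),
    ("can_dag_edit", "sales_daily"),
}
```

In this model, can_access_dag(team_role, "sales_weekly", "can_dag_edit") is False, whereas a role holding ("can_dag_read", "all_dags") can read any DAG.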

Note: I have tested this with Airflow 1.10.2, 1.10.5 and 1.10.6, and it works as expected.